Tokenization is the backbone of natural language processing (NLP): it determines whether your model sees “New York” as a city or as two unrelated words that happen to share a sentence. If you’ve ever blindly thrown your text into a tokenizer and expected perfect results, you’ve probably ended up with a mess of broken phrases, unexpected splits, and a general sense of existential dread.

Off-the-shelf tokenization works well for simple tasks, but real-world NLP demands custom tokenization pipelines. An ideal pipeline understands your dataset’s nuances, accommodates linguistic quirks, and doesn’t collapse when faced with emojis, URLs, or multilingual text, all of which are an absolute nightmare. If you think whitespace tokenization is enough, you might as well be programming in Notepad.

Understanding the Tokenization Landscape

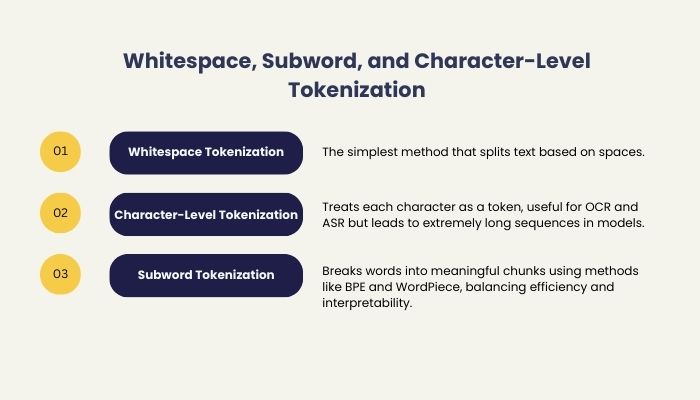

Whitespace, Subword, and Character-Level Tokenization

A tokenization strategy can’t be as simple as splitting on spaces and calling it a day. It’s much more of a “choose your own disaster” scenario. Whitespace tokenization is the simplest and, frankly, laziest option. It assumes words are neatly separated by spaces, which is great until you run into contractions, punctuation, or, worst of all, languages that do not use spaces (looking at you, Chinese and Japanese).
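To make the failure modes concrete, here is a minimal sketch using nothing but Python's built-in `str.split()`. The function name and sample sentences are illustrative assumptions, not part of any library.

```python
# Whitespace tokenization: split on runs of whitespace and nothing else.
def whitespace_tokenize(text: str) -> list[str]:
    return text.split()

english = "Don't panic, it's fine."
chinese = "我喜欢自然语言处理"  # "I like natural language processing"

# Punctuation stays glued to words and contractions are left untouched.
print(whitespace_tokenize(english))
# ["Don't", 'panic,', "it's", 'fine.']

# With no spaces to split on, the whole sentence comes back as one token.
print(whitespace_tokenize(chinese))
# ['我喜欢自然语言处理']
```

Whether `panic,` and `panic` should be the same token is exactly the kind of decision whitespace splitting never gets to make.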

Character-level tokenization, on the other hand, is like a toddler who hasn’t yet learned how to group things. Every character becomes a token, which makes sense in some applications such as OCR and ASR, but when fed to a transformer, the sequence length explodes like a hot dog in the oven.
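A quick way to see the blow-up is to compare sequence lengths for the same sentence. This is a rough sketch with an arbitrary example sentence; the word count uses plain whitespace splitting as a stand-in.

```python
sentence = "Tokenization is harder than it looks."

word_tokens = sentence.split()   # crude word-level baseline
char_tokens = list(sentence)     # one token per character, spaces included

print(len(word_tokens))  # 6
print(len(char_tokens))  # 37

# Standard self-attention compares every token with every other token,
# so its cost grows roughly with the square of the sequence length.
growth = len(char_tokens) / len(word_tokens)
print(f"sequence is {growth:.1f}x longer, attention cost roughly {growth ** 2:.0f}x")
```

The exact ratio depends on the language and the text, but the direction is always the same: much longer sequences for the same content.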

Subword tokenization sits between the two, breaking words down into meaningful, reusable chunks. BPE and WordPiece are the kings of this approach. If you’ve ever wondered how a GPT-style model can make sense of “unhappiness” by splitting it into pieces such as [“un”, “happiness”], you can thank subword tokenization. The exact split depends on the vocabulary the tokenizer learned.

The Tragedy of Off-the-Shelf Tokenizers

A ready-made tokenizer from a library such as Hugging Face, spaCy, or NLTK can save you time, until it doesn’t. These tools are like IKEA furniture: convenient but terribly inflexible when you try to modify them. The results might be decent, but when you need custom rules for domain-specific text, you’ll have to fight hard-coded behaviors that weren’t designed for your data.

If you tokenize financial reports, medical transcripts, or social media posts, you will see the out-of-the-box tokenizer cut stock symbols, drug names, and hashtags into meaningless fragments. At a certain point, you realize you’d be better off building your own tokenizer than relying on a prepackaged one.

The Building Blocks of Custom Tokenization

Regex Tokenization – The Hack That Works Until It Doesn’t

Using regular expressions is like putting duct tape on your NLP machine. You can slap together a few patterns and call it a tokenizer, but don’t expect it to last. Regex-based tokenization is ideal for structured text such as log files or command-line output, where patterns are predictable, but human language complicates matters.

You can define a regex pattern that includes URLs, dates, and numbers, but what happens if someone sends you a poorly formatted email address or a tweet with slang? Your regex patterns will rapidly become unreadable abominations of nested brackets and escape characters, and you’ll find yourself debugging them more than writing actual NLP code.
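As a rough illustration, the sketch below uses Python's standard `re` module with a handful of hand-written patterns for URLs, ISO dates, and numbers. The pattern set and the name `regex_tokenize` are assumptions made for this example, and the output shows how quickly a "reasonable" pattern goes wrong.

```python
import re

# Order matters: earlier alternatives win, so specific patterns come first.
TOKEN_PATTERN = re.compile(
    r"""
    https?://\S+          # URLs (naive: grabs everything up to the next space)
  | \d{4}-\d{2}-\d{2}     # ISO dates such as 2024-01-31
  | \d+(?:\.\d+)?         # integers and decimals
  | \w+(?:'\w+)?          # words, with an optional apostrophe part
  | [^\w\s]               # any single punctuation character
    """,
    re.VERBOSE,
)

def regex_tokenize(text: str) -> list[str]:
    return TOKEN_PATTERN.findall(text)

print(regex_tokenize("On 2024-01-31 it's 3.5 at https://example.com/v2, ok?"))
# ['On', '2024-01-31', "it's", '3.5', 'at', 'https://example.com/v2,', 'ok', '?']
```

Note the comma swallowed by the URL pattern. Fixing it means another special case, and another after that, which is how the unreadable abominations get started.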

Rule-Based Tokenization – When You Want Full Control

With rule-based tokenization, you define explicit rules for splitting tokens: handle contractions, preserve named entities, and make sure your tokenizer doesn’t freak out if it sees “Dr. John Smith, Ph.D.” in a sentence. If regex is duct tape, rule-based tokenization is a Swiss Army knife.

It’s possible to build rule-based tokenizers with token matchers, exceptions, and custom splitting logic using tools like spaCy. This is a solid approach when dealing with domain-specific text, but it requires constant tweaking. Miss one edge case and the tokenizer will cheerfully split “it’s” into three separate tokens.
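The idea of exceptions plus splitting rules can be sketched in plain Python. This is not spaCy's API; the exception table, the contraction table, and `rule_tokenize` are all hypothetical names invented for the example.

```python
# Hypothetical exception table: strings that must survive as single tokens.
EXCEPTIONS = {"Dr.", "Ph.D.", "e.g.", "U.S."}
# Hypothetical contraction rules: lowercase form -> index at which to split.
CONTRACTION_SPLITS = {"it's": 2, "don't": 2}
TRAILING_PUNCT = ",.;:!?"

def rule_tokenize(text: str) -> list[str]:
    tokens = []
    for chunk in text.split():
        # Rule 1: peel trailing punctuation, unless the chunk is a protected exception.
        trailing = []
        while chunk and chunk not in EXCEPTIONS and chunk[-1] in TRAILING_PUNCT:
            trailing.insert(0, chunk[-1])
            chunk = chunk[:-1]
        # Rule 2: split known contractions, keeping the original casing.
        split_at = CONTRACTION_SPLITS.get(chunk.lower())
        if split_at is not None:
            tokens.extend([chunk[:split_at], chunk[split_at:]])
        elif chunk:
            tokens.append(chunk)
        tokens.extend(trailing)
    return tokens

print(rule_tokenize("It's Dr. John Smith, Ph.D., right?"))
# ['It', "'s", 'Dr.', 'John', 'Smith', ',', 'Ph.D.', ',', 'right', '?']
```

Every entry in those two tables is a decision someone had to make by hand, which is where the constant tweaking comes from.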

Subword Tokenization – Byte Pair Encoding (BPE) and the Wizardry of WordPiece

Why BPE Is Your Frenemy

Byte Pair Encoding (BPE) reduces the number of unknown tokens by dividing words into frequently occurring subword units. That makes it a great fit for neural models: it largely eliminates out-of-vocabulary words while keeping the vocabulary size manageable. Training a BPE tokenizer on your own dataset, however, requires some planning.

You must decide on the vocabulary size, handle rare words correctly, and make sure frequent words aren’t split in a way that confuses your model. The frustration of watching “Washington” become [“Wash”, “ing”, “ton”] while some obscure medical term survives intact is indescribable.
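For intuition, here is a toy BPE trainer in plain Python. It is a simplified sketch: it works on characters rather than bytes, omits end-of-word markers, and breaks ties by first occurrence. The function names and the tiny corpus are assumptions for illustration. The number of merges is what ultimately sets the vocabulary size.

```python
from collections import Counter

def get_pair_counts(words: dict) -> Counter:
    """Count adjacent symbol pairs, weighted by word frequency."""
    counts = Counter()
    for symbols, freq in words.items():
        for pair in zip(symbols, symbols[1:]):
            counts[pair] += freq
    return counts

def merge_pair(words: dict, pair: tuple) -> dict:
    """Replace every occurrence of `pair` with a single merged symbol."""
    merged = {}
    for symbols, freq in words.items():
        out, i = [], 0
        while i < len(symbols):
            if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
                out.append(symbols[i] + symbols[i + 1])
                i += 2
            else:
                out.append(symbols[i])
                i += 1
        key = tuple(out)
        merged[key] = merged.get(key, 0) + freq
    return merged

def train_bpe(corpus: list, num_merges: int):
    word_freqs = Counter(word for line in corpus for word in line.split())
    words = {tuple(word): freq for word, freq in word_freqs.items()}
    merges = []
    for _ in range(num_merges):
        counts = get_pair_counts(words)
        if not counts:
            break
        best = max(counts, key=counts.get)  # on a tie, the first pair seen wins
        merges.append(best)
        words = merge_pair(words, best)
    return merges, words

merges, words = train_bpe(["low low low lower lowest"], num_merges=2)
print(merges)  # [('l', 'o'), ('lo', 'w')]
print(words)   # {('low',): 3, ('low', 'e', 'r'): 1, ('low', 'e', 's', 't'): 1}
```

The frequent word "low" becomes a single token after two merges, while the rarer suffixes stay split into characters until more merges are allowed.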

WordPiece & Unigram – More Sorcery, Same Headaches

WordPiece is similar to BPE but optimized for language modeling. It chooses subwords based on likelihoods rather than raw pair frequency, which means it can be more efficient at handling rare words, if you are willing to put up with the added complexity of the algorithm. The Unigram algorithm, available in SentencePiece, takes this one step further by treating tokenization as a probabilistic problem.
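The likelihood-based part happens at training time and is not shown here. What can be sketched briefly is the encoding side: WordPiece-style tokenizers typically segment a word by greedy longest-match-first lookup, marking continuation pieces with `##`. The toy vocabulary and the function name below are assumptions for illustration.

```python
def wordpiece_encode(word: str, vocab: set, unk: str = "[UNK]") -> list:
    """Greedy longest-match-first segmentation with '##' continuation pieces."""
    pieces, start = [], 0
    while start < len(word):
        end = len(word)
        match = None
        # Try the longest remaining substring first, then shrink from the right.
        while start < end:
            candidate = word[start:end]
            if start > 0:
                candidate = "##" + candidate
            if candidate in vocab:
                match = candidate
                break
            end -= 1
        if match is None:
            return [unk]  # no piece fits, so the whole word is unknown
        pieces.append(match)
        start = end
    return pieces

toy_vocab = {"un", "##happi", "##ness", "wash", "##ing", "##ton"}

print(wordpiece_encode("unhappiness", toy_vocab))  # ['un', '##happi', '##ness']
print(wordpiece_encode("washington", toy_vocab))   # ['wash', '##ing', '##ton']
print(wordpiece_encode("xyz", toy_vocab))          # ['[UNK]']
```

With a vocabulary trained on your own data, "washington" would ideally be a single entry and never reach the fallback pieces at all.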

It is important to experiment with these methods on your own dataset before committing to one. Left untuned, they can produce ridiculous output in which common words get split unpredictably, making your model about as useful as a pocket calculator in a calculus exam.

Performance Optimization – Because No One Likes a Slow Tokenizer

Speed vs. Accuracy – The Eternal Tradeoff

The problem with fast tokenization is that it often comes at the expense of accuracy. A tokenizer that is too simple is fast but useless, while one that is too complex takes a long time to process even a short document. To find the right balance, profile different tokenization approaches and identify the bottlenecks.
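A minimal profiling harness can be built with the standard `timeit` module. The two tokenizers below are deliberately simple stand-ins, and `profile` is a hypothetical helper name; swap in whatever candidates you are actually comparing.

```python
import re
import timeit

WORD_RE = re.compile(r"\w+|[^\w\s]")

def fast_tokenize(text: str) -> list:
    return text.split()            # quick, but leaves punctuation attached

def careful_tokenize(text: str) -> list:
    return WORD_RE.findall(text)   # slower, separates punctuation

def profile(tokenizer, docs, repeats: int = 5) -> float:
    """Return the best wall-clock time in seconds for one pass over docs."""
    timer = timeit.Timer(lambda: [tokenizer(d) for d in docs])
    return min(timer.repeat(repeat=repeats, number=1))

docs = ["Tokenizers are fast, until they aren't."] * 10_000

for fn in (fast_tokenize, careful_tokenize):
    seconds = profile(fn, docs)
    print(f"{fn.__name__}: {seconds:.4f}s ({len(docs) / seconds:,.0f} docs/s)")
```

Timings vary by machine, so the useful number is the ratio between candidates on your own data, not the absolute figures.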

It is possible to use GPU-accelerated tokenization, but whether it is worth the complexity depends on your use case. When your pipeline needs to process millions of words per second, optimizing tokenization speed is essential. Otherwise, you’ll be staring at loading bars instead of training your model.

Parallel Processing & GPU Acceleration – Because You Deserve Fast Tokenization

Python’s multiprocessing library can accelerate tokenization by spreading the workload across processes. If your tokenizer supports batch processing, it can benefit from running on multiple CPU cores.
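One way this might look with the standard `multiprocessing.Pool` is sketched below. The whitespace tokenizer is a stand-in, and `tokenize_batch`, `chunked`, and `tokenize_parallel` are hypothetical helper names, not library functions.

```python
from multiprocessing import Pool

def tokenize_batch(batch: list) -> list:
    # Stand-in tokenizer; swap in your own.
    # It must be a top-level function so worker processes can import it.
    return [text.split() for text in batch]

def chunked(items: list, size: int) -> list:
    """Split items into consecutive batches of at most `size` elements."""
    return [items[i:i + size] for i in range(0, len(items), size)]

def tokenize_parallel(texts: list, workers: int = 4, batch_size: int = 1000) -> list:
    batches = chunked(texts, batch_size)
    with Pool(processes=workers) as pool:
        results = pool.map(tokenize_batch, batches)  # output order matches input order
    # Flatten the per-batch results back into one list of token lists.
    return [tokens for batch in results for tokens in batch]
```

Call `tokenize_parallel(texts)` from inside an `if __name__ == "__main__":` guard, since some platforms start worker processes by re-importing the main module. Batching matters because sending documents to workers one at a time can cost more in inter-process overhead than the tokenization itself.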

Deep learning applications can be slowed down by tokenization bottlenecks. Using fast tokenizers like Hugging Face’s tokenizers library (written in Rust) can significantly reduce preprocessing time. Tokenization can also be moved into the TensorFlow or PyTorch side of the pipeline to take advantage of GPU acceleration, though how much you gain depends on your setup.

Tokenization Is Hard, But So Are We

Developing a tokenization pipeline can be frustrating, full of unexpected pitfalls, and in need of constant refinement. The upside is that when you nail it, you get a tokenizer that works how you want it to, without the constraints of a prepackaged solution.

Remember that tokenization is as much an art as a science. You don’t have to get it perfect, but it has to be good enough for your purposes. And if you ever find yourself debugging a regex tokenizer at 2 AM, questioning your life choices, you’re not alone.

Frequently Asked Questions

How to do tokenization in NLP?

The simplest tokenization scheme feeds characters to the model individually. A Python str is a sequence of characters, so character-level tokenization takes just one line of code: given text = “Tokenizing text is a core task of natural language processing.”, calling list(text) returns the individual characters.
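A model needs numbers rather than characters, so the usual next step is to map each character to an integer ID. The sketch below does that with a vocabulary built from the text itself, which is an assumption made for brevity; a real pipeline would fix the vocabulary ahead of time.

```python
text = "Tokenizing text is a core task of natural language processing."

# Character-level tokenization really is one line.
tokens = list(text)
print(tokens[:10])  # ['T', 'o', 'k', 'e', 'n', 'i', 'z', 'i', 'n', 'g']

# Build a character-to-ID vocabulary and encode the text.
vocab = {ch: idx for idx, ch in enumerate(sorted(set(tokens)))}
ids = [vocab[ch] for ch in tokens]

# Decoding is the reverse lookup, and it round-trips exactly.
id_to_char = {idx: ch for ch, idx in vocab.items()}
decoded = "".join(id_to_char[i] for i in ids)
print(decoded == text)  # True
```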

How to build a BPE tokenizer?

In the text “cat in hat,” the pair 257 occurs twice: once at the beginning and once before “hat.”

That pair should be replaced by a new token ID that is not already in use, say 258, and the merge should be recorded. The new text becomes: <258>cat in <258>hat. The updated vocabulary is: 0:

Is BERT a tokenizer?

BERT itself is a model, not a tokenizer, but it comes with one. BERT’s tokenizer uses subword-based tokenization, in which unknown words are broken down into smaller pieces so that the model can still deduce some meaning from them. For example, a word like ‘boys’ could be split into ‘boy’ and ‘s’ if it is not in the vocabulary as a whole. BERT’s vocabulary is generated with the WordPiece algorithm.